
Experiment description

A single sender broadcast 512-byte packets (Ethernet broadcast) to 238 receivers running tcpdump. The wired interface eth1 was used on the sender and on all receivers. The number of packets sent and the interval between consecutive packets were varied at the sender.
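The sending loop described above can be sketched as follows. This is a minimal Python sketch under stated assumptions. The page does not include the sender's code, so the transmit call is abstracted as a `send` callable. The 4-byte sequence-number header and the names `build_packet` and `run_sender` are hypothetical. A sequence number is one simple way for receivers to tell which packets are missing.

```python
import struct
import time
from typing import Callable

PACKET_SIZE = 512  # bytes per packet, as in the experiment


def build_packet(seq: int, size: int = PACKET_SIZE) -> bytes:
    """Hypothetical packet format: 4-byte big-endian sequence number, then zero padding."""
    header = struct.pack("!I", seq)
    return header + bytes(size - len(header))


def run_sender(send: Callable[[bytes], object], count: int, interval_s: float) -> None:
    """Send `count` packets, sleeping `interval_s` seconds between consecutive sends.

    interval_s = 0 reproduces the "as fast as possible" runs (no sleep at all).
    """
    for seq in range(count):
        send(build_packet(seq))
        if interval_s > 0 and seq < count - 1:
            time.sleep(interval_s)


# Example transport (assumption: UDP broadcast stands in for raw Ethernet broadcast):
#   import socket
#   sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
#   sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
#   run_sender(lambda p: sock.sendto(p, ("255.255.255.255", 9999)), 10000, 0.010)
```

Setting `interval_s=0.010` corresponds to the 10 ms runs and `interval_s=0` to the "as fast as possible" runs. Because `time.sleep` only guarantees a minimum delay, the actual gap between sends is somewhat longer than `interval_s`.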

Results

The following metrics were measured on each receiving node:

- the number of packets successfully received,

- the percentage of missing packets, and

- the time taken to receive all packets.
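Computing these three metrics from a receiver's capture can be sketched as below. The sketch assumes each packet carries a sequence number (the page does not describe the payload) and that each tcpdump capture has already been reduced to `(arrival_time_s, seq)` pairs. The function name `receiver_metrics` is hypothetical, and measuring the time taken from first to last arrival is an assumption, since the page does not say when timing starts.

```python
from typing import Dict, List, Tuple


def receiver_metrics(arrivals: List[Tuple[float, int]], sent: int) -> Dict[str, float]:
    """Per-receiver metrics from (arrival_time_s, seq) pairs for one run.

    Duplicate sequence numbers are counted once; sequence numbers outside
    [0, sent) are ignored. 'time_s' is last arrival minus first arrival
    (an assumption: the page does not define when timing starts).
    """
    valid = [(t, s) for t, s in arrivals if 0 <= s < sent]
    received = len({s for _, s in valid})
    missing_pct = 100.0 * (sent - received) / sent
    times = [t for t, _ in valid]
    duration = max(times) - min(times) if times else 0.0
    return {"received": received, "missing_pct": missing_pct, "time_s": duration}


# receiver_metrics([(0.0, 0), (0.01, 1), (0.03, 3)], sent=4)
# → {'received': 3, 'missing_pct': 25.0, 'time_s': 0.03}
```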

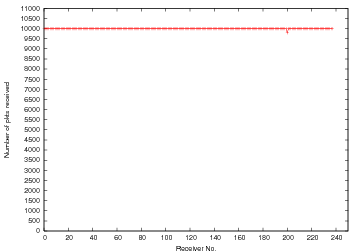

Experiment 1

10000 packets were sent with an interval of 10 ms between consecutive packets. The figure above (left) shows the number of packets lost per receiver: every receiver except one (node14-3) received all 10000 packets. A second run of the same experiment showed the same behavior, which suggests a problem specific to node14-3. The figure on the right shows the number of packets successfully received at each receiver.

We also saw the same behavior when we used 5000 packets instead of 10000.
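A back-of-the-envelope calculation shows how light the offered load in this experiment is. It treats each packet as 512 bytes, ignores header overhead, and ignores any overshoot of the sleep call. With $n$ packets and inter-packet interval $\Delta t$:

$$T \geq (n-1)\,\Delta t = (10000 - 1) \times 10\ \text{ms} \approx 100\ \text{s}$$

$$R = \frac{512 \times 8\ \text{bit}}{10\ \text{ms}} = 409.6\ \text{kbit/s}$$

For 5000 packets the minimum duration halves to about 50 s while the rate stays the same. The page does not state the link speed, but this rate is well below the capacity of common wired Ethernet links.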

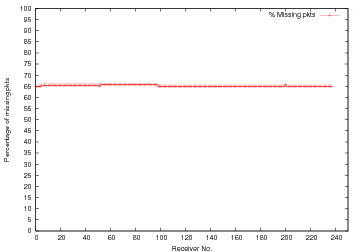

Experiment 2

10000 packets were sent "as fast as possible", i.e., with no sleep between consecutive send calls at the sender. The figures above show the number of packets lost per receiver (left) and the number of packets successfully received (right). The current experiments do not show where this loss occurs. Possible sources include:

- buffer overflows at the sender or receiver (between user space and kernel space, between the kernel and the driver, or between the driver and the card);

- the network switches being unable to handle the load (this seems unlikely).
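One way to examine the receiver-side buffer overflow hypothesis in isolation is a loopback test. A receiver with a deliberately small socket receive buffer does not read until the sender has finished sending without any pause. This is a sketch under stated assumptions: it uses UDP over the loopback interface rather than Ethernet broadcast, and the function name `loopback_overflow_demo`, the buffer size, and the packet count are arbitrary illustrative choices. On Linux, datagrams that arrive while the receive buffer is full are dropped silently, so the returned count is typically far below the number sent.

```python
import socket


def loopback_overflow_demo(count: int = 2000, size: int = 512, rcvbuf: int = 4096) -> int:
    """Send `count` UDP datagrams back to back to a receiver with a small receive
    buffer that is not read until sending ends; return how many survived.

    Illustrative only: UDP over loopback stands in for Ethernet broadcast.
    """
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Request a small receive buffer; the kernel may round or clamp this value.
        rx.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, rcvbuf)
        rx.bind(("127.0.0.1", 0))
        addr = rx.getsockname()
        payload = bytes(size)
        for _ in range(count):
            try:
                tx.sendto(payload, addr)  # no sleep: "as fast as possible"
            except OSError:
                pass  # some kernels report ENOBUFS instead of dropping silently
        # Drain whatever the receive buffer managed to hold.
        rx.setblocking(False)
        received = 0
        while True:
            try:
                rx.recv(size)
            except BlockingIOError:
                break
            received += 1
        return received
    finally:
        rx.close()
        tx.close()
```

On the testbed itself, comparable evidence at a receiver would be the "packets dropped by kernel" count that tcpdump prints when a capture stops.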

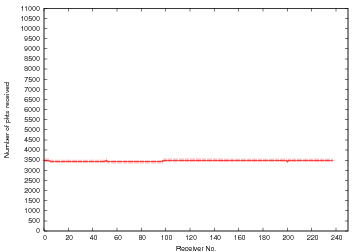

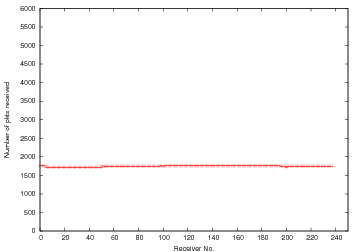

Experiment 3

5000 packets were sent "as fast as possible", i.e., with no sleep between consecutive send calls at the sender. The figures above show the number of packets lost per receiver (left) and the number of packets successfully received (right). As in Experiment 2, the current experiments do not show where this loss occurs.

Design Notes for nodehandler

Underlying model

- nodehandler broadcasts each command to all the nodes using a C program

System Parameters

- Underlying traffic model: determine how many packets, of what size, and with what inter-arrival times a "typical" experiment script generates

- For this traffic model, test the performance in terms of packet loss and average latency
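Characterizing the traffic of one experiment script could be sketched like this. The sketch assumes a trace of `(send_time_s, size_bytes)` records has already been collected for the script's commands, for example from a capture at the nodehandler; how the trace is obtained and the name `traffic_model` are hypothetical. It reports exactly the three quantities listed above: packet count, mean size, and mean inter-arrival time.

```python
from statistics import mean
from typing import Dict, List, Tuple


def traffic_model(trace: List[Tuple[float, int]]) -> Dict[str, float]:
    """Summarize a trace of (send_time_s, size_bytes) records from one experiment
    script: number of packets, mean packet size, and mean inter-arrival time."""
    if not trace:
        return {"packets": 0, "mean_size": 0.0, "mean_interarrival_s": 0.0}
    ordered = sorted(trace)  # order by send time
    times = [t for t, _ in ordered]
    gaps = [b - a for a, b in zip(times, times[1:])]
    return {
        "packets": len(ordered),
        "mean_size": mean(s for _, s in ordered),
        "mean_interarrival_s": mean(gaps) if gaps else 0.0,
    }
```

Means hide burstiness, so a fuller model might also keep the distribution of gaps rather than only their average.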

Building reliability into the protocol

Some thoughts on building reliability into the protocol

- Cumulative ACK by each nodeagent every 5 packets

- Stagger the sending of ACKs to reduce collisions: a nodeagent waits N × backoff interval before sending, where N is its row number

- A separate nodehandler thread maintains a bitmap of all packets and sets a 1 for every missing packet reported by a nodeagent

- nodehandler broadcasts these missing packets after a timeout

- This timeout is based on the time all nodes need to send their ACKs to the nodehandler under the staggering scheme in the second item
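The scheme outlined in these notes can be sketched as a minimal, single-threaded Python model. It assumes each packet carries a sequence number, that an ACK reports a cumulative point plus the gaps seen so far, and that the nodehandler keeps one bitmap shared across all nodes (retransmissions are broadcast anyway). The names `NodeAgent`, `NodeHandler`, `Ack`, and `slack_s`, the ACK contents, and the one-byte-per-packet bitmap are illustrative choices, not part of the design notes; the separate nodehandler thread is left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

ACK_EVERY = 5  # nodeagent sends a cumulative ACK every 5 packets


def ack_delay(row: int, backoff_s: float) -> float:
    """Staggered ACKs: a nodeagent in row N waits N * backoff before replying."""
    return row * backoff_s


def ack_timeout(max_row: int, backoff_s: float, slack_s: float = 0.0) -> float:
    """Retransmission timeout: time until the last row has ACKed, plus a
    hypothetical safety margin slack_s."""
    return ack_delay(max_row, backoff_s) + slack_s


@dataclass
class Ack:
    cum: int            # every packet with seq < cum has been received
    missing: List[int]  # gaps between cum and the highest seq seen so far


@dataclass
class NodeAgent:
    received: Set[int] = field(default_factory=set)

    def on_packet(self, seq: int) -> Optional[Ack]:
        """Record a packet; return an Ack after every ACK_EVERY new packets."""
        if seq in self.received:
            return None  # duplicate, e.g. a retransmission already seen
        self.received.add(seq)
        if len(self.received) % ACK_EVERY == 0:
            return self.make_ack()
        return None

    def make_ack(self) -> Ack:
        cum = 0
        while cum in self.received:
            cum += 1
        highest = max(self.received, default=-1)
        missing = [s for s in range(cum, highest) if s not in self.received]
        return Ack(cum, missing)


class NodeHandler:
    def __init__(self, total: int) -> None:
        # One byte per packet for readability; a packed bitmap would use one bit.
        self.missing = bytearray(total)

    def on_ack(self, ack: Ack) -> None:
        """Set a 1 for every packet a nodeagent reports missing."""
        for seq in ack.missing:
            if 0 <= seq < len(self.missing):
                self.missing[seq] = 1

    def to_retransmit(self) -> List[int]:
        """Called when the ACK timeout expires: packets to re-broadcast."""
        resend = [seq for seq, bit in enumerate(self.missing) if bit]
        for seq in resend:
            self.missing[seq] = 0
        return resend
```

One limitation of this sketch: a nodeagent cannot report packets lost after the highest sequence number it has seen, so tail losses would need a separate signal (for example, the sender announcing the total packet count).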

Fetching the OML XML schema

- Replace the existing Ruby web server with Apache to serve the XML files

- nodeagents still use wget to fetch these files

Attachments (9)

- num-pkts-lost.png (4.4 KB)

- num-pkts-recvd.png (4.5 KB)

- num-pkts-lost.2.png (4.5 KB)

- num-pkts-recvd.2.png (4.7 KB)

- num-pkts-lost.3.png (4.6 KB)

- num-pkts-recvd.3.png (4.7 KB)

- total-time.png (5.0 KB)

- total-time.2.png (4.1 KB)

- total-time.3.png (4.0 KB)
